Technological advances are driving product innovation and creating unprecedented challenges for designers in every industry, and this is particularly evident in the automotive industry. Automakers are working to upgrade today's ADAS-enabled Level 2 (L2) driving technology to L3 and L4, with the eventual goal of AI-based systems that reach SAE Level 5, the highest level of driving automation. Level 3 passenger cars are already on the road in several parts of the world, and Level 4 autonomous taxis are in extensive trials on the streets of cities such as San Francisco (and in some areas are already in commercial use). However, a number of commercial, logistical and regulatory challenges remain before these vehicles can be brought to market on a large scale.

One challenge for automakers is bridging the gap between a proof of concept built on a few technical demonstrators and robust, repeatable, practical mass manufacturing and deployment. The latter requires a stable design, security, reliability, and reasonable pricing.

There are different ways to build a proof of concept. The "top-down" approach integrates as much hardware, sensing, and software as possible into the vehicle, and then works toward performance, cost, and weight goals by consolidating components and reducing complexity. In contrast, the "bottom-up" approach is more structured: automakers complete the design for one level of autonomous driving, then gather data and overcome the challenges necessary to reach the next level. The latter method is increasingly favored by large OEMs.

A gradual design transition from L2 to L3 to L4

For cars to deliver advanced ADAS functions and ultimately drive themselves, they must sense their surroundings and then act on "what they see." The more accurately the vehicle perceives its environment, the better the decisions it can make and the safer it is to drive. Therefore, the first step in designing an autonomous vehicle is to determine the number and type of sensors deployed around it. Three technologies are commonly used for environmental sensing: image sensors (cameras), millimeter-wave radar, and LiDAR. Each has advantages and disadvantages.

Of these three sensor types, camera and LiDAR data are generally already processed in the central domain controller. By contrast, most millimeter-wave radars on the market today process data at the front end: the radar module generates targets itself and sends them to the central domain controller, rather than having its raw data processed centrally. This article focuses on why centralized processing matters for 4D imaging millimeter-wave radar and on the technical advantages of the Ambarella CV3 in this regard.

Once the vehicle's sensor needs have been identified, a number of key decisions have to be made, including designing the system architecture and selecting the right processor. This requires a fundamental decision about whether to process sensor data centrally or at the front end.

Figure 1: Determining the sensor architecture is a prerequisite for the success of autonomous vehicles

Although traditional 3D radar is low in cost, its perception capability has shortcomings. Typically, the millimeter-wave radar performs its calculations at the front end, generates targets, and passes them to the domain controller, where they are fused with the camera's perception results. Because information is lost at the front end, this approach greatly weakens the contribution of 3D radar, and with visual perception now highly developed, traditional 3D radar risks being sidelined.

As millimeter-wave radar technology has evolved, the technical specifications of 4D imaging millimeter-wave radar have improved greatly over those of traditional 3D millimeter-wave radar. The improvements include an additional height dimension, longer detection range, denser point clouds, better angular resolution, more reliable detection of stationary targets, and fewer false and missed detections.
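To make the "additional height dimension" concrete, here is a minimal sketch of how a single 4D radar detection might be represented and converted to Cartesian coordinates. The class name, field names, axis convention, and the 4.5 m clearance threshold are all illustrative assumptions, not part of any radar vendor's data format.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RadarPoint4D:
    """One detection from a 4D imaging radar (field names are illustrative)."""
    range_m: float        # radial distance to the target
    azimuth_rad: float    # horizontal angle, 0 = straight ahead
    elevation_rad: float  # vertical angle, the dimension 3D radar lacks
    doppler_mps: float    # radial velocity relative to the sensor

    def to_cartesian(self) -> tuple[float, float, float]:
        """Convert to an assumed sensor frame: x forward, y left, z up."""
        horizontal = self.range_m * math.cos(self.elevation_rad)
        x = horizontal * math.cos(self.azimuth_rad)
        y = horizontal * math.sin(self.azimuth_rad)
        z = self.range_m * math.sin(self.elevation_rad)
        return (x, y, z)


def is_overhead(point: RadarPoint4D, clearance_m: float = 4.5) -> bool:
    """Flag returns that sit at or above a hypothetical clearance height."""
    _, _, z = point.to_cartesian()
    return z >= clearance_m
```

Without elevation, a stationary return from an overhead gantry and one from a stopped vehicle at the same range and azimuth look alike. With it, a check such as `is_overhead` can help tell them apart, which is one reason stationary-target detection becomes more reliable.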

These advantages usually stem from more complex modulation techniques and from more complex point cloud algorithms, tracking algorithms, and so on. As a result, 4D imaging millimeter-wave radar often requires a dedicated radar processing chip to reach the required performance. Some designs on the market today add a radar DSP or FPGA to the front-end radar module so that the calculations run at the front end. Although some of these front-end-processed 4D imaging radars outperform traditional 3D radars, their higher cost is an obstacle to widespread adoption.

With centralized processing, the raw data from all sensors is brought together at a single point, so it can be fused without losing key information. Because no processing takes place at the front end, the millimeter-wave radar sensor module is greatly simplified, which reduces its size, power consumption and cost. In addition, most millimeter-wave radars sit behind the vehicle's bumper, where they are easily damaged in a collision, so a simpler and cheaper module also keeps repair costs down after an accident.

The centralized approach also gives developers the flexibility to adjust the relative importance of millimeter-wave radar data and camera data in real time, providing optimal environmental awareness across a variety of weather and driving conditions. For example, when driving along a highway in bad weather, millimeter-wave radar data is more valuable; when driving slowly in a crowded city, cameras play the more important role in recognizing lane lines, reading road signs, perceiving the scene, and identifying hazards. LiDAR, for its part, is better suited to general obstacle detection and nighttime automatic emergency braking (AEB). A dynamically configured sensor suite can therefore save processor resources, reduce power consumption, and improve environmental awareness and safety.
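The idea of re-weighting radar and camera data by driving context can be sketched as follows. This is only an illustration: the `DrivingContext` fields, the thresholds, and the multipliers are placeholder assumptions rather than calibrated values, and a production system would fuse far richer data than a single confidence score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrivingContext:
    speed_kph: float
    visibility_m: float  # estimated optical visibility (rain, fog, glare)


def sensor_weights(ctx: DrivingContext) -> dict[str, float]:
    """Return normalized radar/camera weights for the current context."""
    radar = 1.0
    camera = 1.0
    if ctx.visibility_m < 100.0:  # poor weather degrades optical sensing
        camera *= 0.4
    if ctx.speed_kph > 90.0:      # highway speeds reward long-range sensing
        radar *= 1.5
    if ctx.speed_kph < 40.0:      # dense urban scenes reward semantic detail
        camera *= 1.5
    total = radar + camera
    return {"radar": radar / total, "camera": camera / total}


def fuse_confidence(radar_conf: float, camera_conf: float,
                    ctx: DrivingContext) -> float:
    """Blend per-sensor detection confidences with context-dependent weights."""
    w = sensor_weights(ctx)
    return w["radar"] * radar_conf + w["camera"] * camera_conf
```

With these placeholder rules, a foggy highway context shifts weight toward radar, while a clear, slow urban context shifts it toward the camera. Because both data streams arrive at one processor, this kind of adjustment can be made in one place rather than in each sensor module.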

Comparison of front-end processing and central domain control processing for 4D imaging millimeter-wave radar

Front-end processing imaging radar

1. Limited computing power: adding compute at the front end raises power consumption, which constrains radar data density and sensitivity

2. Fixed compute provisioning: each module must be sized for the worst-case scenario, even though that capacity goes unused in common scenarios

3. Higher radar front-end cost, because radar data processing is placed in the front-end node

4. Sensor fusion is overly simple and can only be performed at the target level

Central domain control processing radar

1. More powerful and efficient centralized processing gives the radar better angular resolution, data density and sensitivity

2. Computing power can be dynamically reallocated among several radars according to the scene, improving compute utilization and perception results

3. Lower radar front-end cost, because the front end contains only the sensor and no computing unit

4. Enables deep fusion of 4D radar data and camera data
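The second point in the central-processing list, shifting compute among radars according to the scene, can be sketched as a simple proportional split. The function name, the abstract "units" of compute, the per-radar floor, and the priority values are all hypothetical; the source does not describe how any actual scheduler works.

```python
def allocate_compute(total_units: float, priorities: dict[str, float],
                     floor_units: float = 1.0) -> dict[str, float]:
    """Split a shared processing budget across radars.

    Each radar first gets a fixed floor so that none is starved; the
    remainder is shared in proportion to scene-dependent priorities.
    """
    if not priorities:
        return {}
    if any(p < 0 for p in priorities.values()):
        raise ValueError("priorities must be non-negative")
    reserved = floor_units * len(priorities)
    if reserved > total_units:
        raise ValueError("budget is smaller than the combined per-radar floor")
    spare = total_units - reserved
    weight_sum = sum(priorities.values())
    if weight_sum == 0:
        share = spare / len(priorities)
        return {name: floor_units + share for name in priorities}
    return {name: floor_units + spare * p / weight_sum
            for name, p in priorities.items()}


# Hypothetical highway scene: the front radar matters most.
highway = allocate_compute(
    20.0,
    {"front": 6.0, "front_left": 1.0, "front_right": 1.0,
     "rear_left": 1.0, "rear_right": 1.0},
)
```

A front-end design cannot do this: each module's processor has to be sized for its own worst case, whereas a shared budget only needs to cover the worst case of the whole scene.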

SoC selection

With the growing popularity of electric vehicles, saving energy and maximizing "range per charge" has become a key consideration for every vehicle component. Energy efficiency is one advantage of a centralized AI domain control chip. By contrast, some multi-chip central domain controllers consume a lot of power and so shorten the vehicle's driving range. If the SoC generates too much heat, an active cooling scheme must be designed in, and some architectures even require liquid cooling, which greatly increases the size, cost and weight of the car and further reduces its driving range.
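The link between controller power draw and driving range can be illustrated with simple arithmetic. The sketch below assumes the compute platform draws constant power while driving and ignores cooling overhead and added weight. Every number in it is hypothetical and chosen only to show the trend, not to reproduce any figure quoted in this article.

```python
def range_km(battery_kwh: float, drive_kwh_per_km: float,
             compute_kw: float, avg_speed_kph: float) -> float:
    """Estimate range when the compute platform draws constant power.

    Compute energy per km is power divided by speed, so slow urban driving
    makes a power-hungry controller proportionally more expensive.
    """
    if avg_speed_kph <= 0:
        raise ValueError("average speed must be positive")
    compute_kwh_per_km = compute_kw / avg_speed_kph
    return battery_kwh / (drive_kwh_per_km + compute_kwh_per_km)


# Hypothetical vehicle: 75 kWh battery, 0.15 kWh/km for propulsion, 50 km/h.
high_power = range_km(75.0, 0.15, compute_kw=0.5, avg_speed_kph=50.0)
low_power = range_km(75.0, 0.15, compute_kw=0.1, avg_speed_kph=50.0)
range_gain_km = low_power - high_power
```

Because the compute term is divided by speed, the same power saving is worth more range in slow city traffic than on the highway.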

AI-based intelligent driving software is rapidly becoming a key element of the system, and the way AI is implemented has a significant impact on the choice of SoC, as well as on the time and money invested in developing the system. The key is to run the latest neural network algorithms with minimal effort and energy, without sacrificing accuracy. This requires careful consideration not only of how the hardware implements neural networks, but also of the middleware, device drivers, and AI tools available to reduce development time and risk.

After the vehicle leaves the factory, its software needs to be updated continuously, whether to fix problems or to add new features. A centralized architecture based on a single domain controller simplifies this process: over-the-air (OTA) updates to one controller avoid the current challenge of updating the software of each front-end module individually, which is more expensive and complex. The OTA approach also means that system cybersecurity is another important area to address during the design process.

The choice of SoC affects every aspect of the design process, including the performance of the entire autonomous vehicle. To help large OEMs bring highly cost-effective intelligent driving products to market faster, and with customer needs fully in mind, Ambarella offers the CV3-AD685, a high-compute central domain control AI chip aimed at flagship L3/L4 intelligent driving systems. It enables centralized processing and deep fusion of raw data from 4D imaging millimeter-wave radar.

Why can Ambarella's Oculii radar achieve lower cost?

Traditional 4D imaging millimeter-wave radar uses a fixed modulation scheme and has to compromise on performance to suit that scheme. Emerging 4D imaging millimeter-wave radar designs that use AI-based, real-time dynamic waveforms are helping to solve this problem. Combining a "sparse array antenna" with an AI algorithm that dynamically learns and adapts to the environment, an approach known as virtual aperture imaging (VAI), fundamentally breaks the performance tradeoff imposed by modulation and increases the resolution of a 4D imaging millimeter-wave radar by up to 100 times. This greatly improves angular resolution, system performance and accuracy. At the same time, the number of antennas is markedly reduced, and the form factor, power budget, data transmission requirements and cost fall accordingly.

How is a centrally processed radar designed on the CV3?

The CV3 supports direct transmission of raw data from the radar front-end sensor to the domain controller, and the necessary radar calculations, including high-quality point cloud generation, processing, tracking and other algorithms, are completed on the CV3. The CV3-AD685 is equipped with a dedicated hardware unit for 4D imaging millimeter-wave radar processing, which handles data processing simply and efficiently even when multiple radars are operating simultaneously.
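As a rough illustration of one step in generating a point cloud from raw radar data, the sketch below implements cell-averaging CFAR (constant false alarm rate) detection over a one-dimensional power profile. This is a generic textbook technique shown in plain Python; it is not a description of the algorithms Ambarella runs on the CV3, and the guard, training, and scale parameters are arbitrary.

```python
def ca_cfar(power: list[float], guard: int = 2, train: int = 8,
            scale: float = 5.0) -> list[int]:
    """Return indices of cells whose power exceeds the local noise estimate.

    For each cell under test, noise is the mean of up to `train` cells on
    each side, skipping `guard` cells next to the cell so that energy from
    the target itself does not inflate the estimate.
    """
    detections = []
    for i in range(len(power)):
        left = power[max(0, i - guard - train):max(0, i - guard)]
        right = power[i + guard + 1:i + guard + 1 + train]
        window = left + right
        if not window:
            continue  # profile too short to estimate noise for this cell
        noise = sum(window) / len(window)
        if power[i] > scale * noise:
            detections.append(i)
    return detections


# Synthetic profile: flat noise floor with two strong returns.
profile = [1.0] * 64
profile[20] = 50.0
profile[45] = 30.0
hits = ca_cfar(profile)  # [20, 45]
```

In a front-end design, only detections like `hits` leave the radar module. In a centralized design, the full profile is available to the domain controller, so thresholds can be adapted to the scene and weak returns can be checked against camera data before they are discarded.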

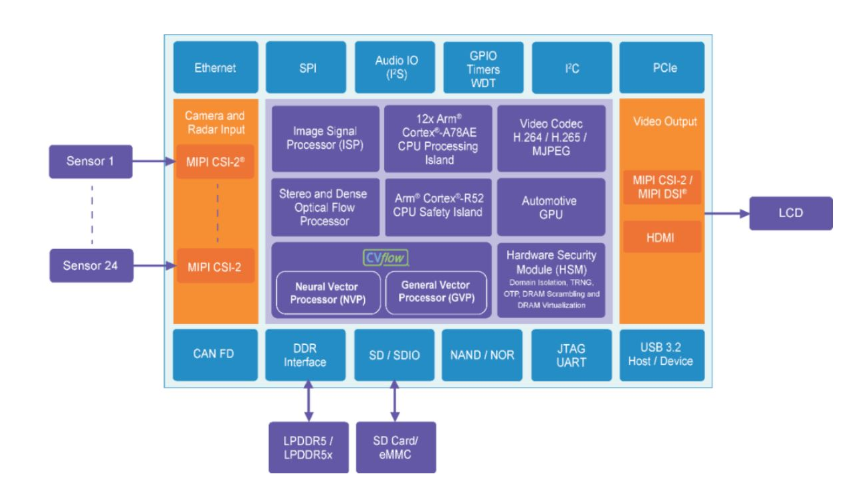

Figure 3 shows the block diagram of a powerful SoC, Ambarella's CV3-AD685. The SoC is designed for automotive central domain controllers and runs a variety of neural network algorithms at high performance, enabling a complete autonomous driving solution. The CV3-AD685 has a dedicated image signal processing unit, can take input from a range of sensors (such as cameras, millimeter-wave radar, ultrasonic sensors and LiDAR), performs deep fusion across multiple sensors, and runs algorithms such as vehicle path prediction, planning and control. The architecture of the CV3-AD series, designed specifically for automotive intelligent driving, is very different from that of competing chips such as GPUs, which are built for more general parallel computing so that they can run a wide variety of applications. As a result, when running intelligent driving applications, the CV3 is more efficient and consumes less power than competing products.

Figure 3: Ambarella CV3-AD685 SoC block diagram, a chip designed for centralized AI domain control in automotive applications

As shown in Figure 3, the SoC integrates a neural vector processor (NVP), a general-purpose vector processor (GVP) for accelerating general-purpose machine vision algorithms and millimeter-wave radar processing, a high-performance image signal processor (ISP), 12 Arm® Cortex®-A78AE cores and multiple R52 CPUs, binocular stereo vision and dense optical flow engines, and a GPU (for 3D rendering such as AVM). The rest of the CV3-AD series shares the CV3-AD685's overall architecture and suits intelligent driving systems from L2+ to L4, with sufficient computing power and safety redundancy to run complete autonomous driving solutions. Its AI performance is about three times that of high-performance GPUs, and despite this, the SoC operates at significantly lower power consumption than competing products. Therefore, compared with competing chips, the CV3-AD685 can extend the range of an electric vehicle by at least 30 kilometers for the same battery capacity. Alternatively, for the same range, the battery cost can be greatly reduced and the battery weight cut by several kilograms.

Conclusion

In recent years, intelligent driving technology has made a qualitative leap forward. Various advanced intelligent driving features have been mainstream for some time, and new ones are emerging as automakers continue to innovate. The challenge now facing the automotive industry is how to bring the current Level 3 and Level 4 intelligent driving test vehicles into full production.

The key to this advancement lies in the choice of sensors, vehicle architecture, and domain control chip. Using processors designed for centralized sensor fusion, together with innovative AI-based technologies such as sparse millimeter-wave radar arrays, autonomous vehicles can centrally process millimeter-wave radar data and fuse it with camera data to respond to the dynamic environment around the vehicle. The resulting gains in performance and sensitivity can reduce dependence on LiDAR, further lowering costs while achieving better environmental sensing performance.
